[Michel Bitbol]: Thank you, it’s going to be a very short question, but first of all, I would like to say something about your work. I thought that your paper, the paper you sent me, was a wonderful paper, and I think your talk was really a wonderful talk, so thank you, first of all.

Second of all, so, very short question. Is this nonpersistence model simply the field theoretic model?

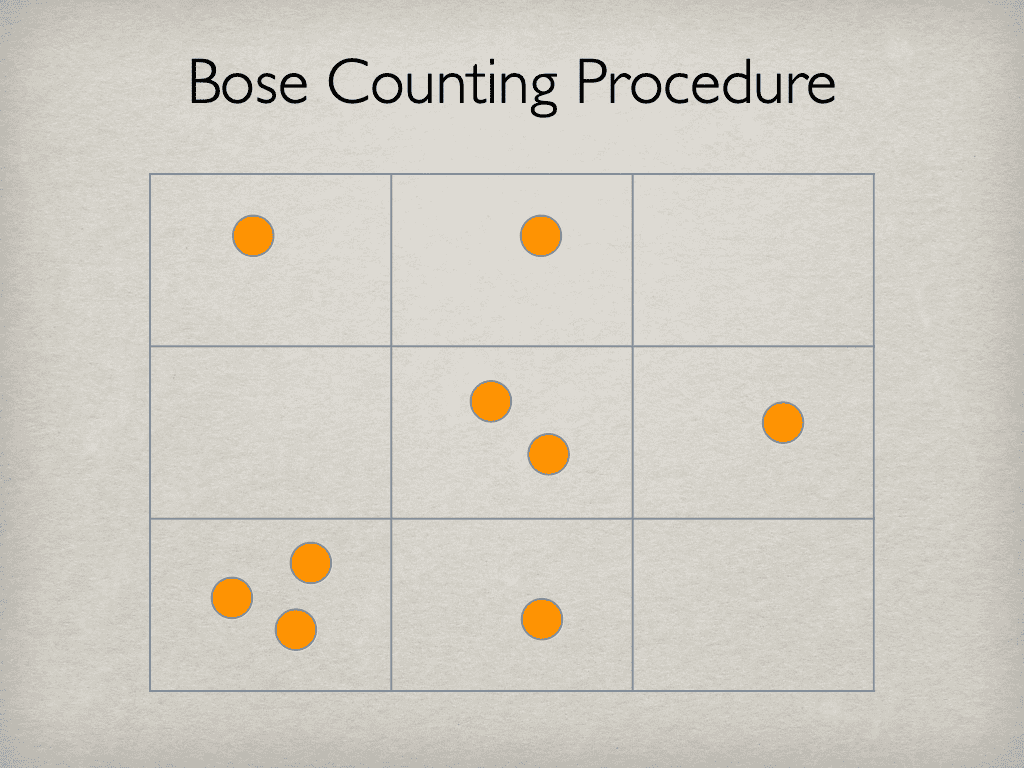

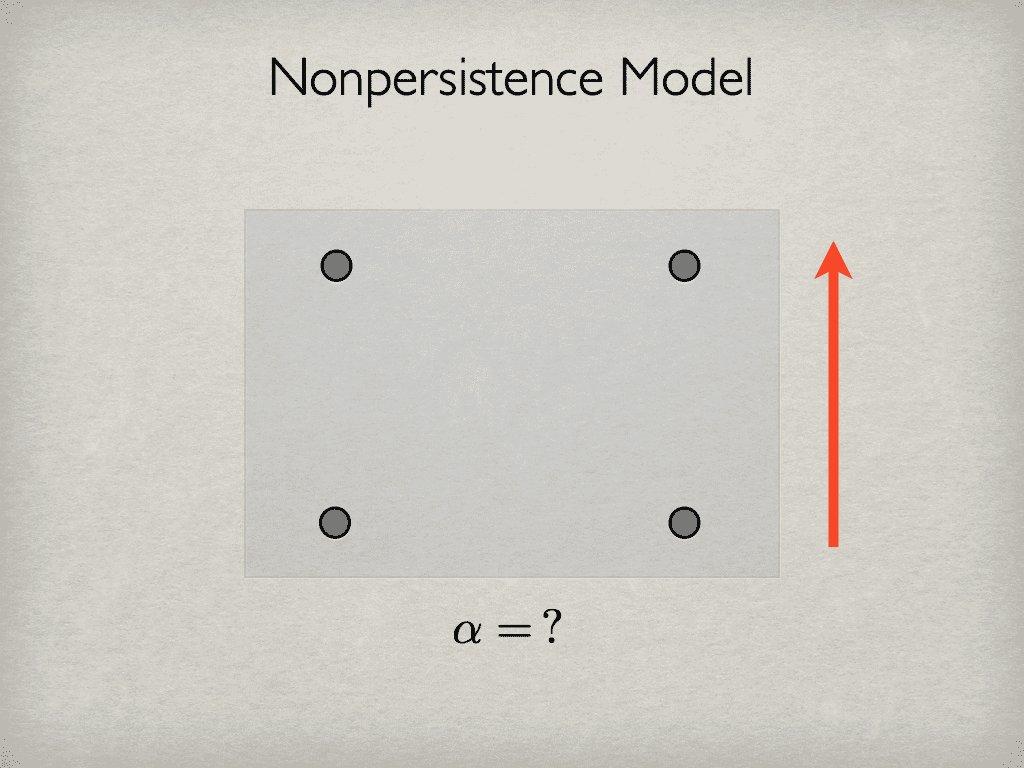

[Philip]: That’s a great question. The way I see it is the following. In a field theoretic model, the basic concept is Fock space. So in other words, all we care about is how many excitations are there, as it were, in each cell. So on the surface it looks like, “Oh, this is just a nonpersistence model.”

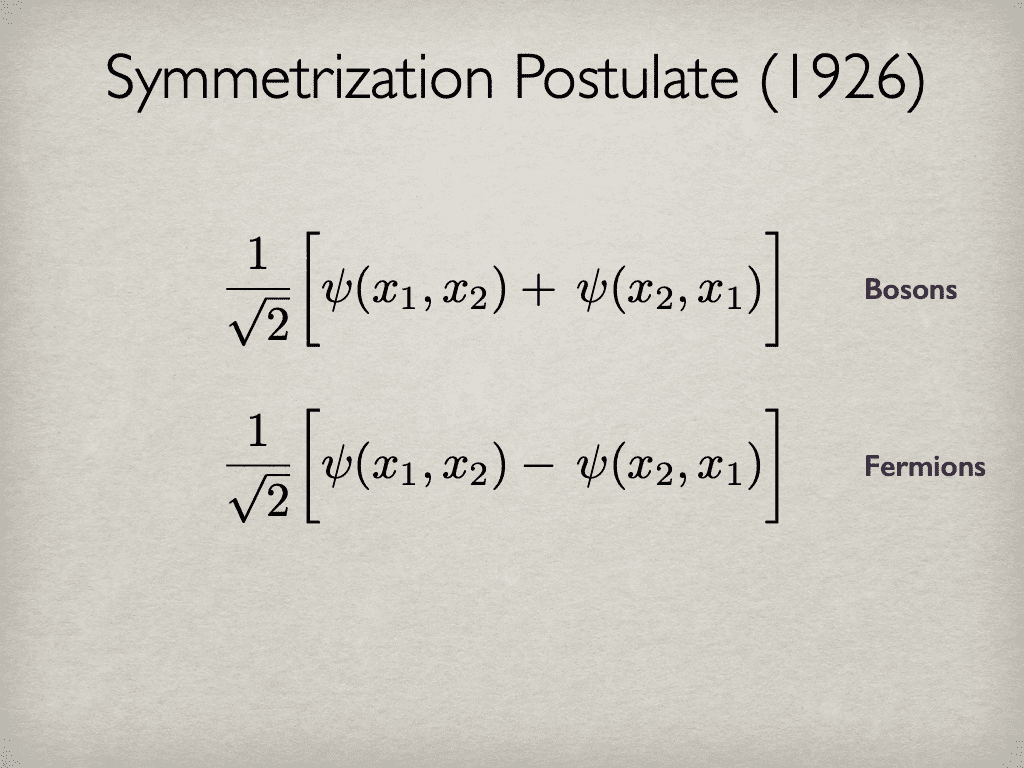

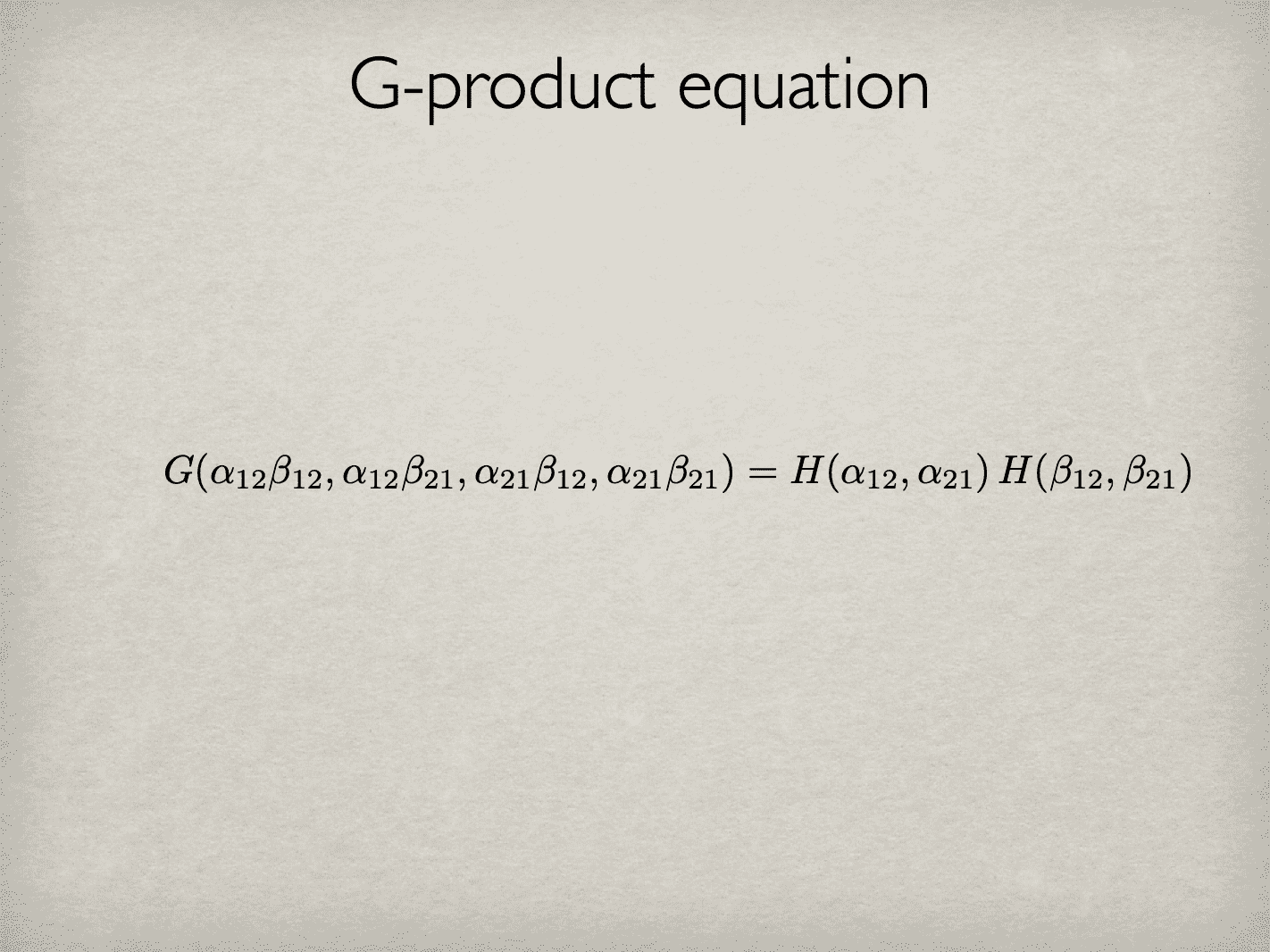

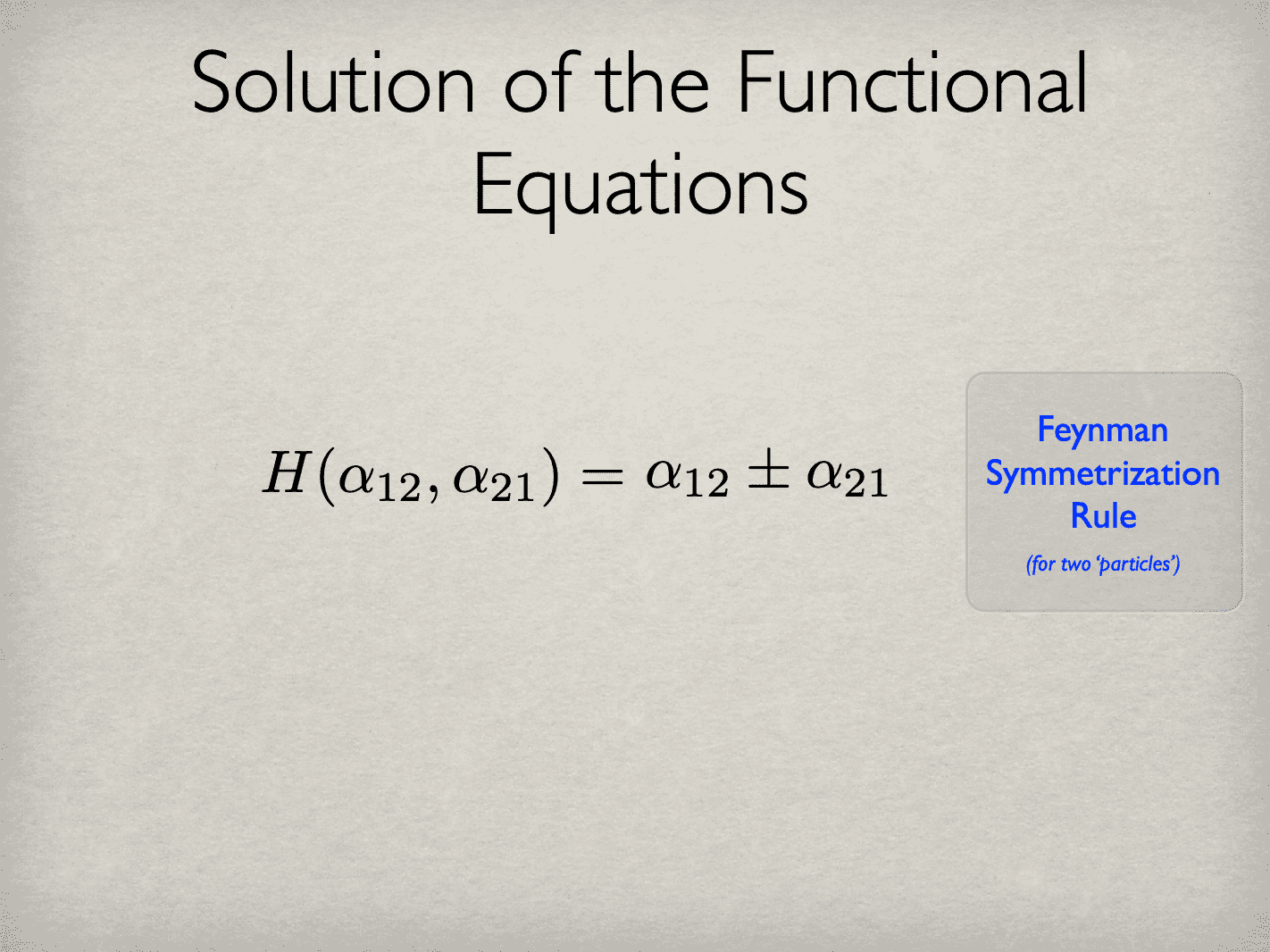

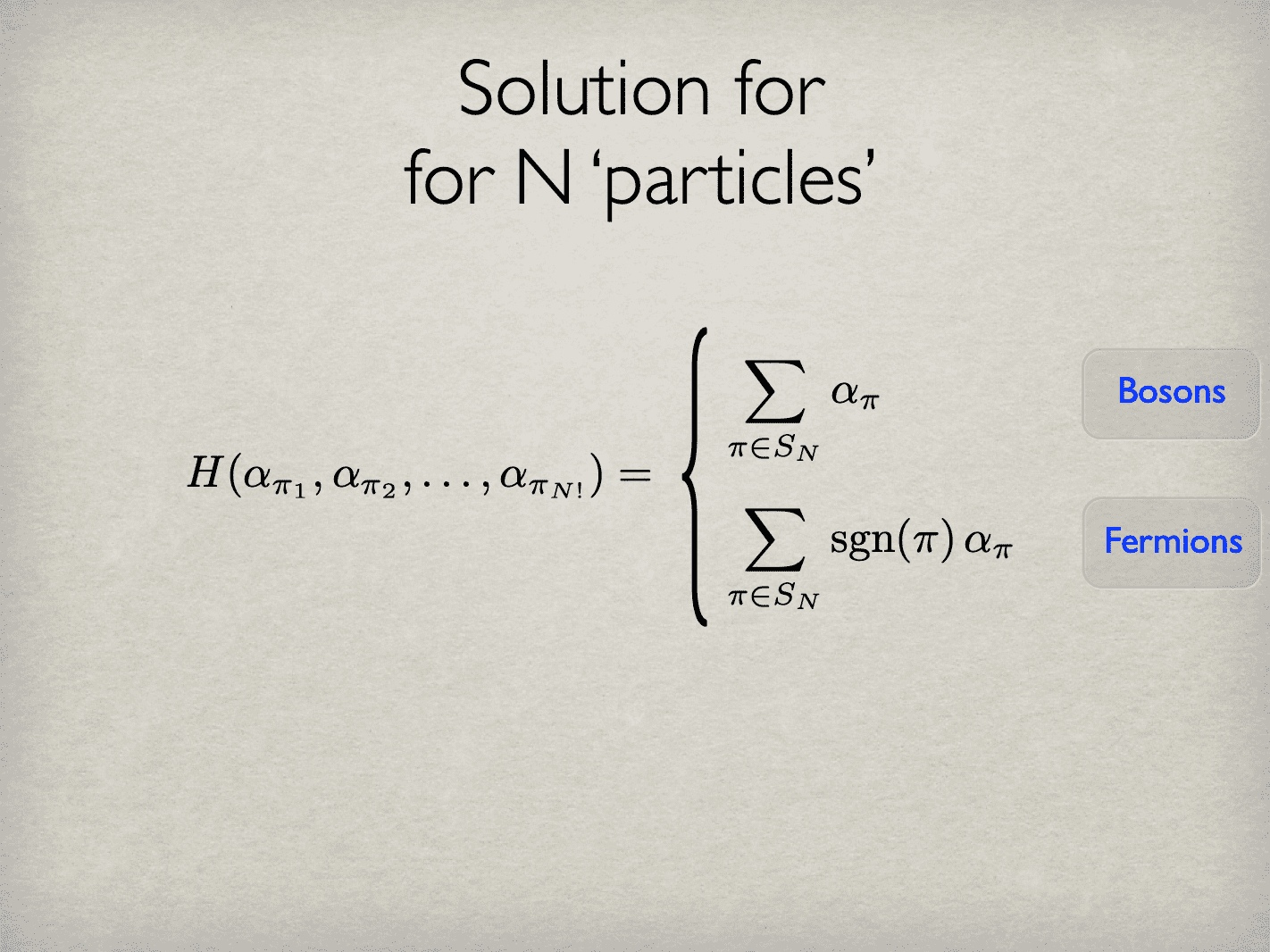

However, you then assume the formal structure of these commutation and anti-commutation relations, which bring in the fermionic and bosonic behaviour.
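For reference, the relations mentioned here are usually written as follows. This uses standard textbook notation, not notation taken from the talk: $a_i$ and $c_i$ are assumed labels for the bosonic and fermionic annihilation operators of cell (mode) $i$.

$$
\begin{aligned}
\text{bosons:}\quad & [a_i, a_j^\dagger] = \delta_{ij}, & [a_i, a_j] &= 0, & [a_i^\dagger, a_j^\dagger] &= 0,\\
\text{fermions:}\quad & \{c_i, c_j^\dagger\} = \delta_{ij}, & \{c_i, c_j\} &= 0, & \{c_i^\dagger, c_j^\dagger\} &= 0.
\end{aligned}
$$

Here $[A, B] = AB - BA$ and $\{A, B\} = AB + BA$. The fermionic relation $\{c_i^\dagger, c_i^\dagger\} = 0$ gives $(c_i^\dagger)^2 = 0$, so no cell can hold more than one excitation; the bosonic relations impose no such limit.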

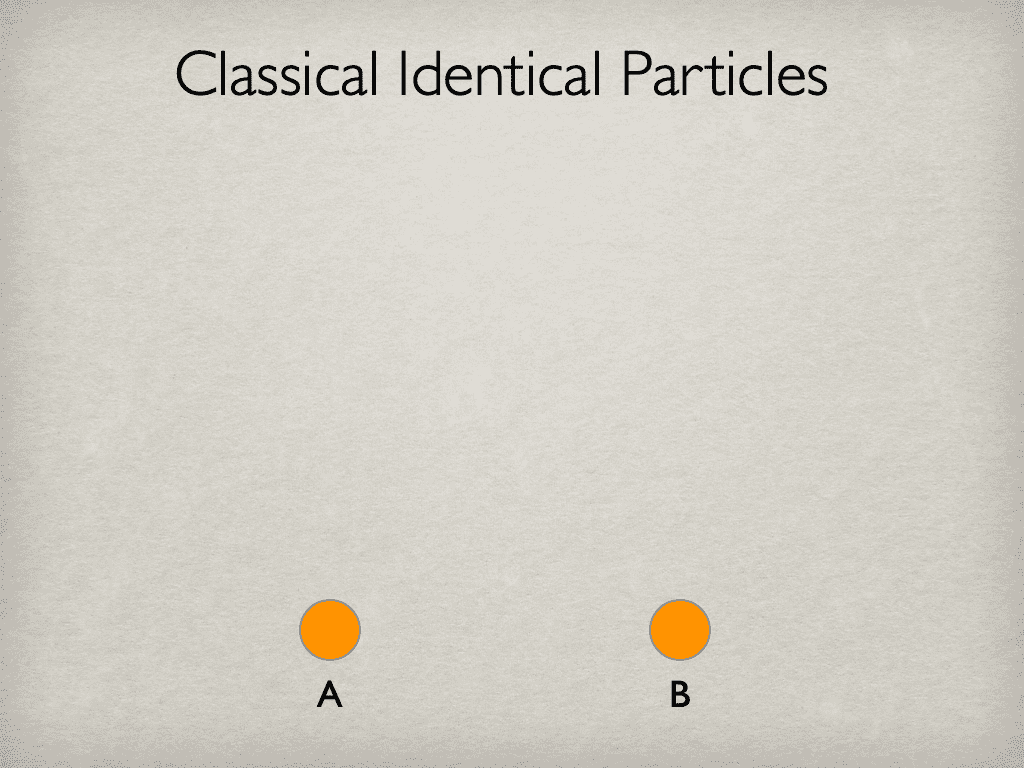

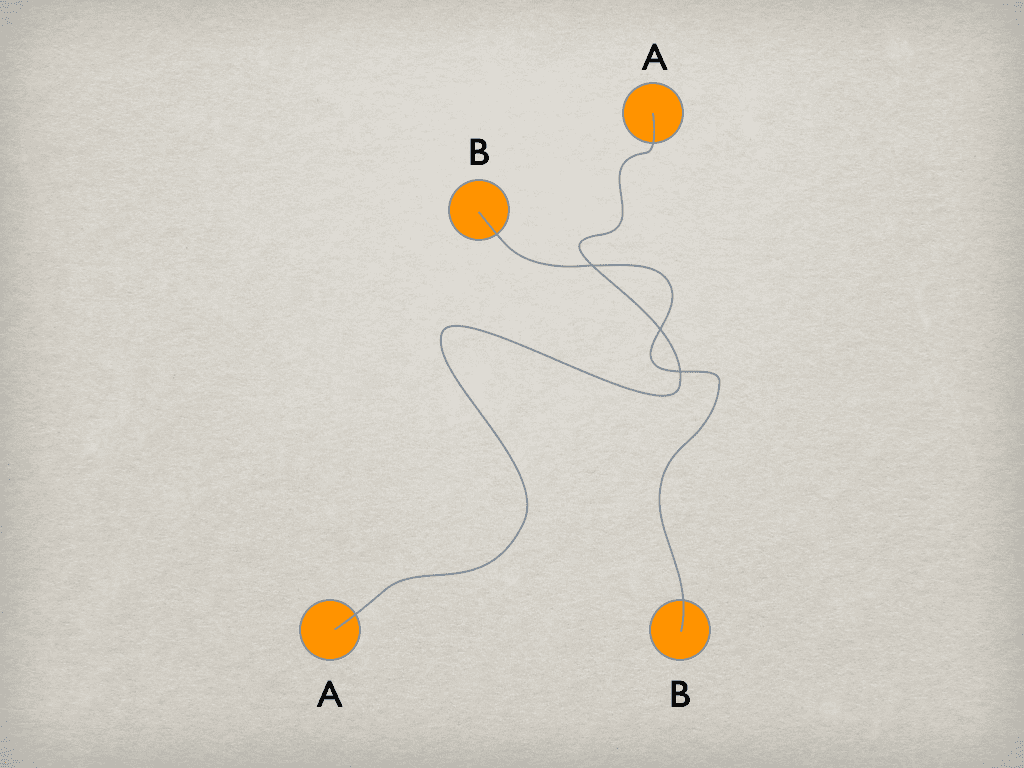

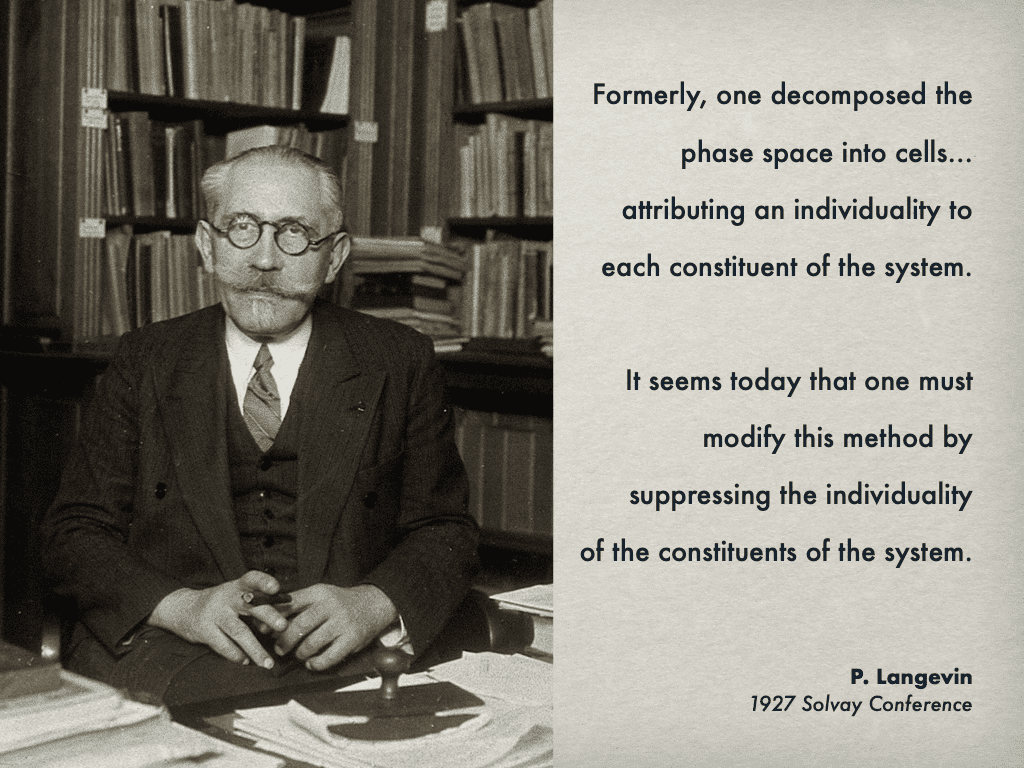

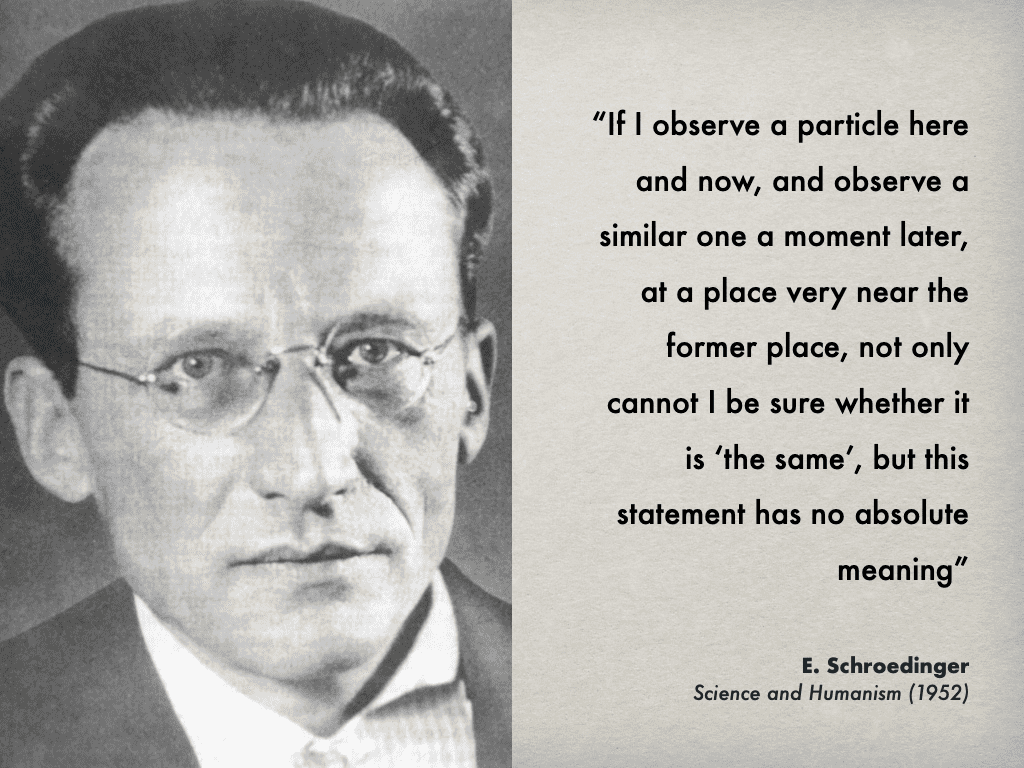

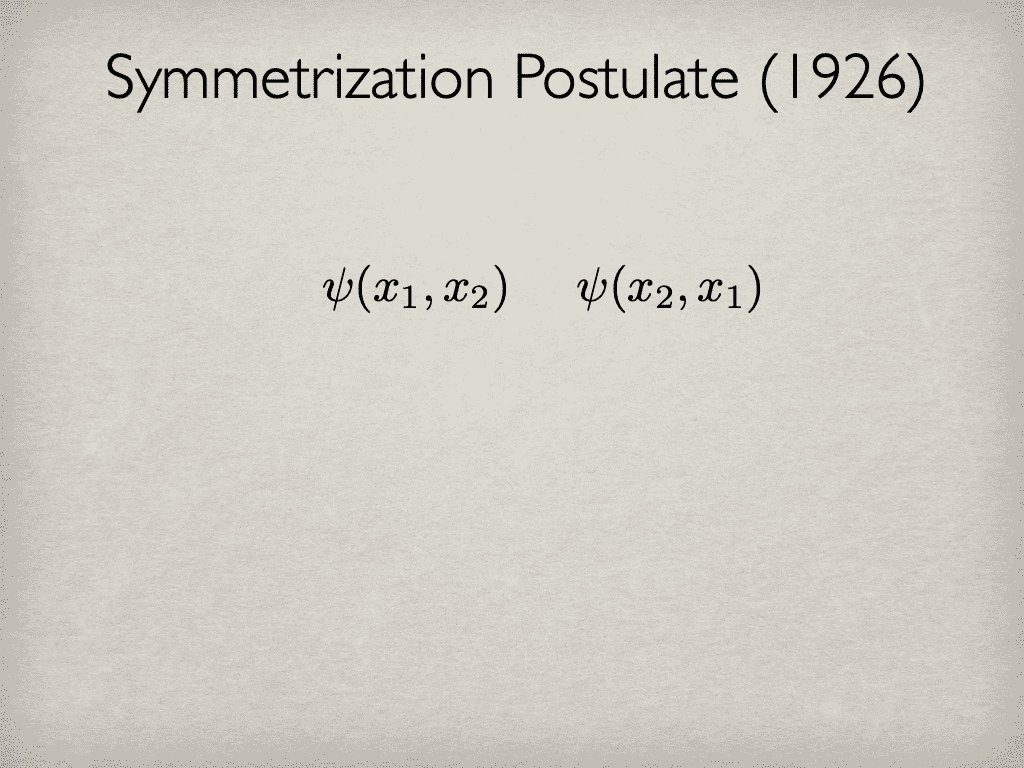

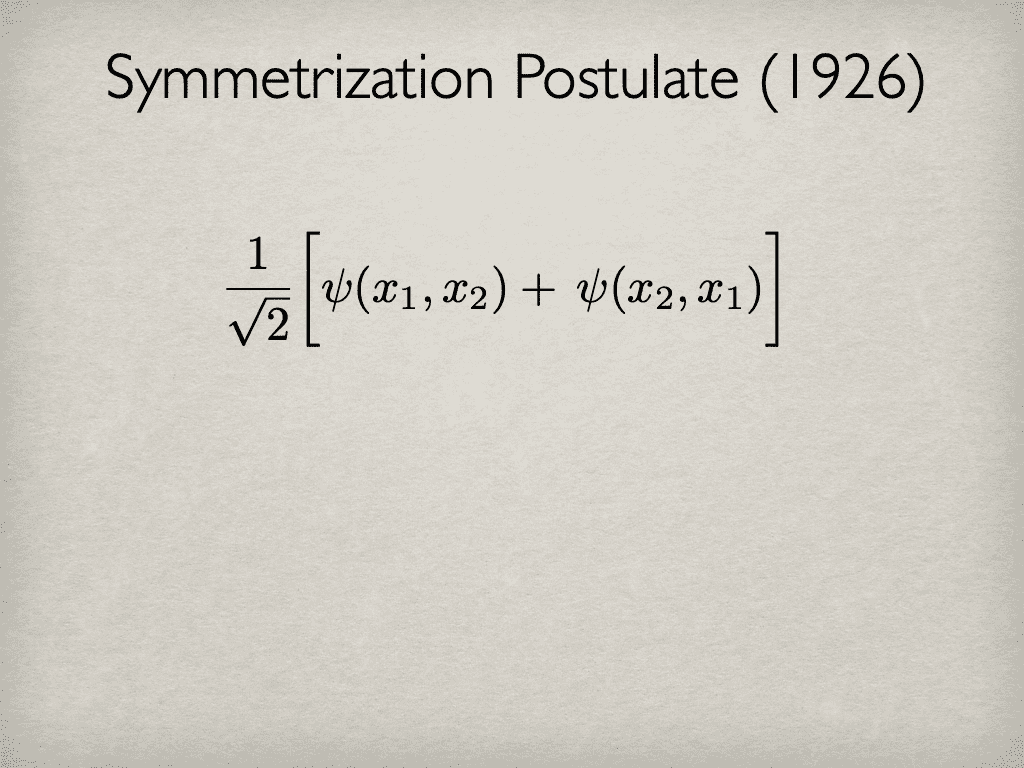

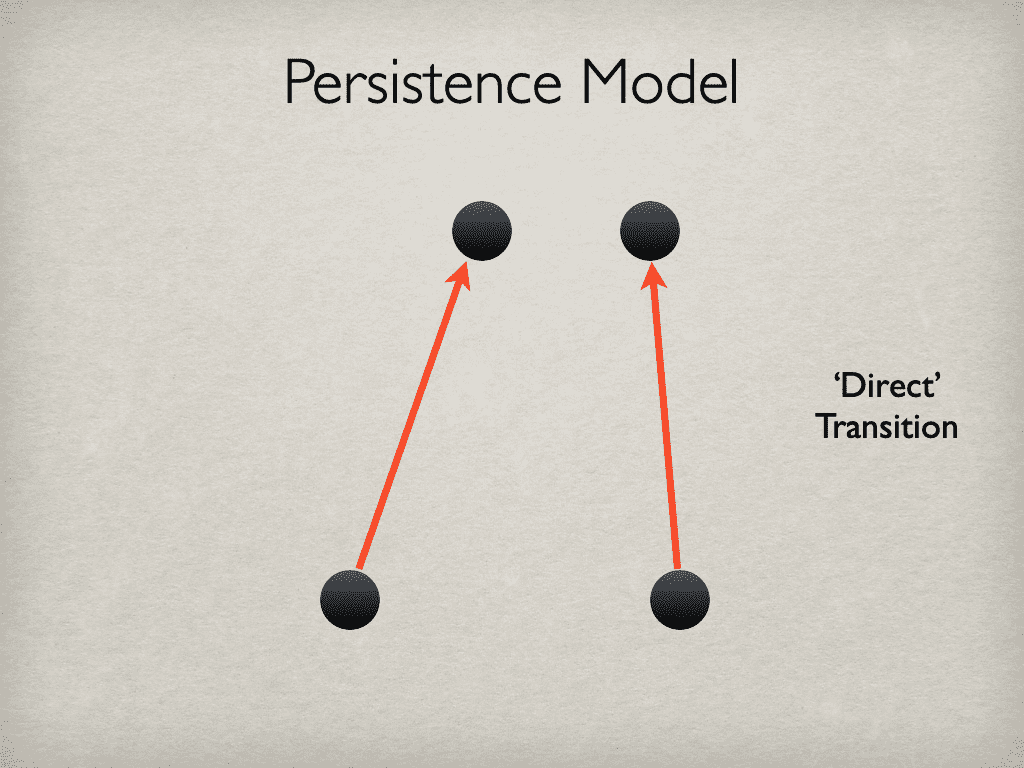

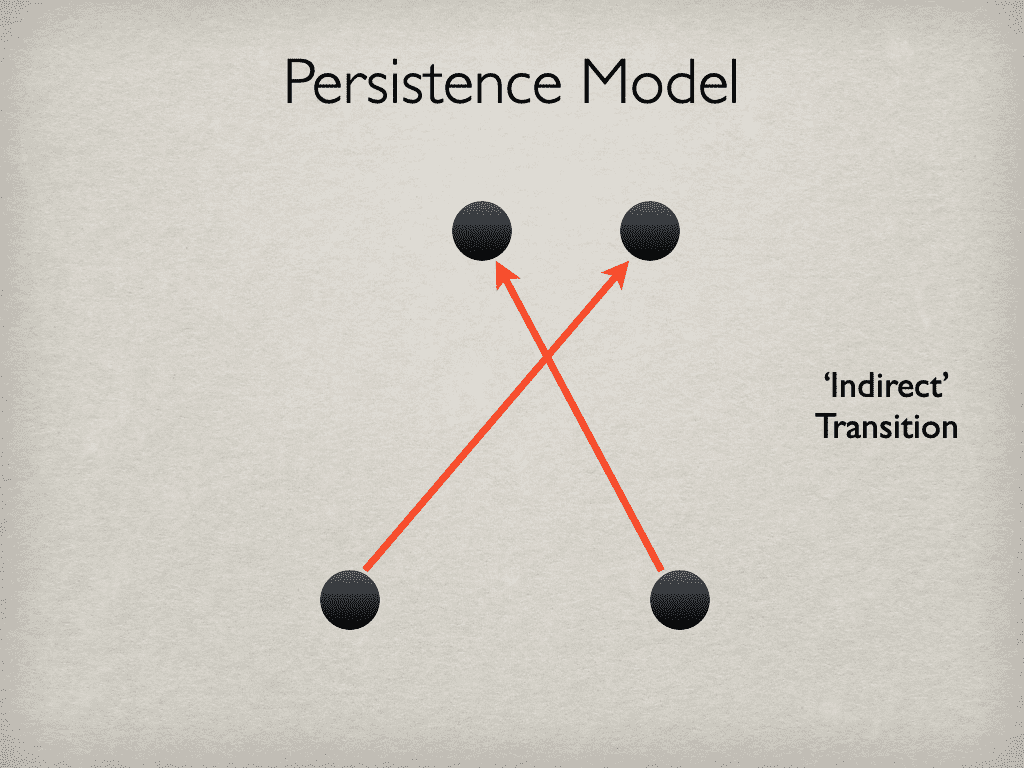

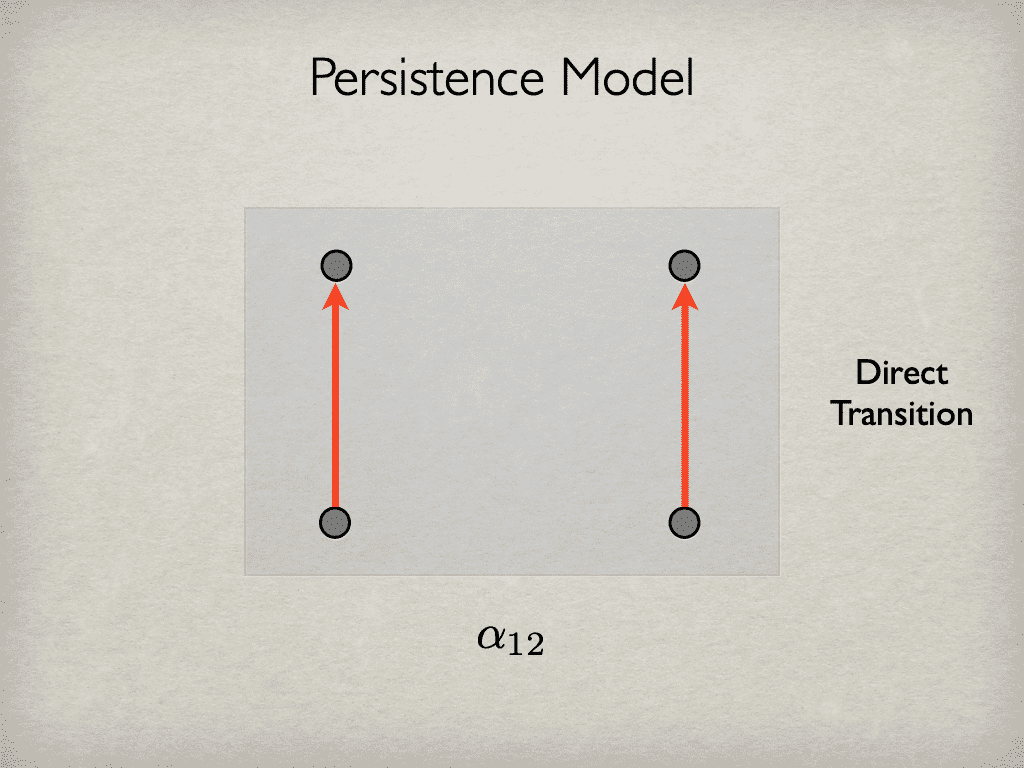

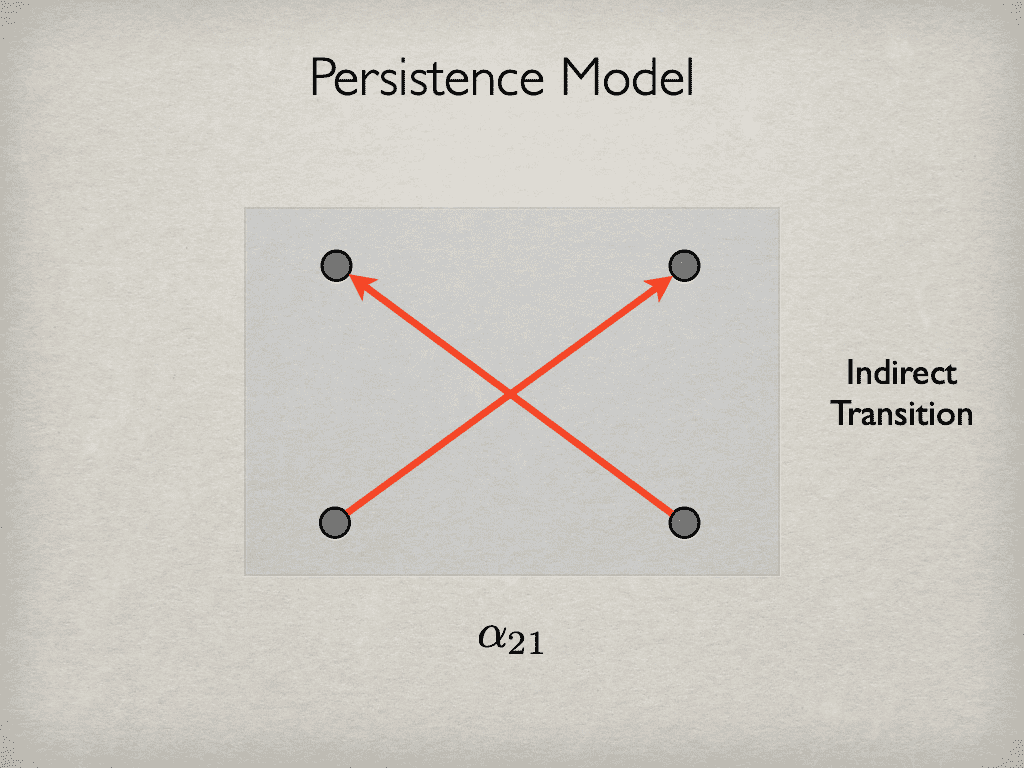

And so the question is: what does that formalism mean? And so we have here a classic situation, where if you look at non-relativistic [quantum] physics, and the symmetrization postulate, the natural reading of the formalism of the symmetrization postulate is to say the identical particles are persistent, but not re-identifiable.

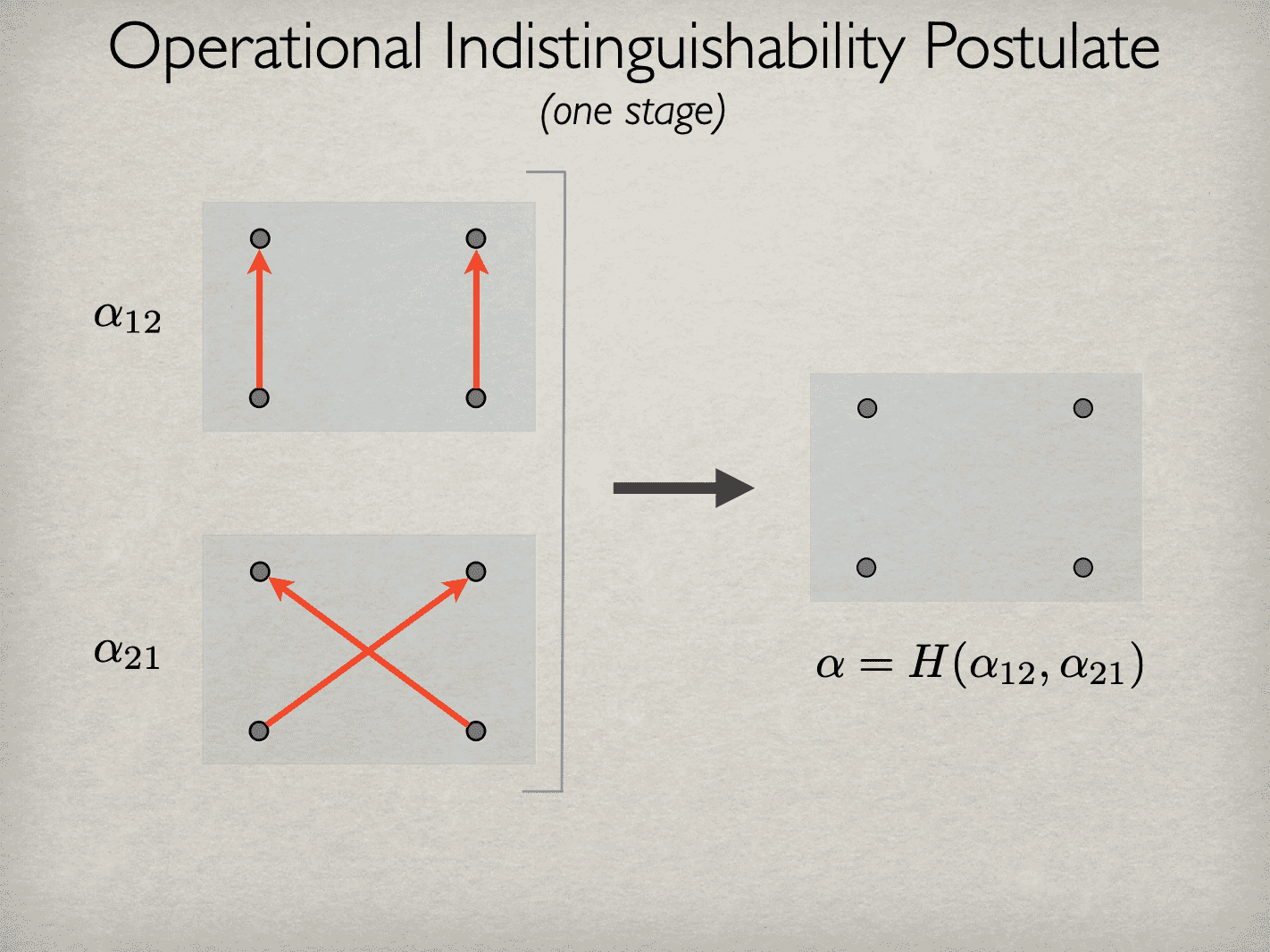

But there’s actually a one-to-one correspondence between a quantum field theory of a constant number of particles, and the non-relativistic formalism. So you’ve got here a situation with two formalisms that actually map to one another, yet can be naturally read in two different ways. And you can see here the danger of reading the formalism.
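The correspondence described here can be illustrated at the level of basis-state labels. The following is a minimal sketch for bosons only, under stated assumptions: the function names and the choice of 2 particles in 3 cells are hypothetical, and the sketch covers only the labelling of basis states, not the operator algebra. A symmetrized state is labelled by an unordered collection of cell labels (one per particle), and a Fock state by a list of occupation numbers (one per cell).

```python
from collections import Counter
from itertools import combinations_with_replacement


def symmetrized_labels(num_particles, num_cells):
    """Unordered assignments of particles to cells (bosonic basis labels)."""
    return list(combinations_with_replacement(range(num_cells), num_particles))


def to_occupations(labels, num_cells):
    """Particle-style label -> Fock-style occupation numbers, one per cell."""
    counts = Counter(labels)
    return tuple(counts.get(cell, 0) for cell in range(num_cells))


def to_labels(occupations):
    """Inverse map: occupation numbers -> sorted tuple of cell labels."""
    return tuple(cell for cell, n in enumerate(occupations) for _ in range(n))


# Hypothetical example: 2 identical bosons distributed over 3 cells.
particle_view = symmetrized_labels(2, 3)
fock_view = [to_occupations(labels, 3) for labels in particle_view]

for labels, occupations in zip(particle_view, fock_view):
    print(labels, "<->", occupations)
```

Every particle-style label corresponds to exactly one occupation list and vice versa, so for a fixed particle number neither labelling carries more information than the other. The difference lies only in how one is tempted to read them.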

So what happens with a quantum field theory formalism is you see these quanta, and you think, “Oh, this is nonpersistence,” but then you forget about explaining, or accounting for, the commutation and anti-commutation relations. And that’s where we smuggle in, from my point of view, the persistence model.

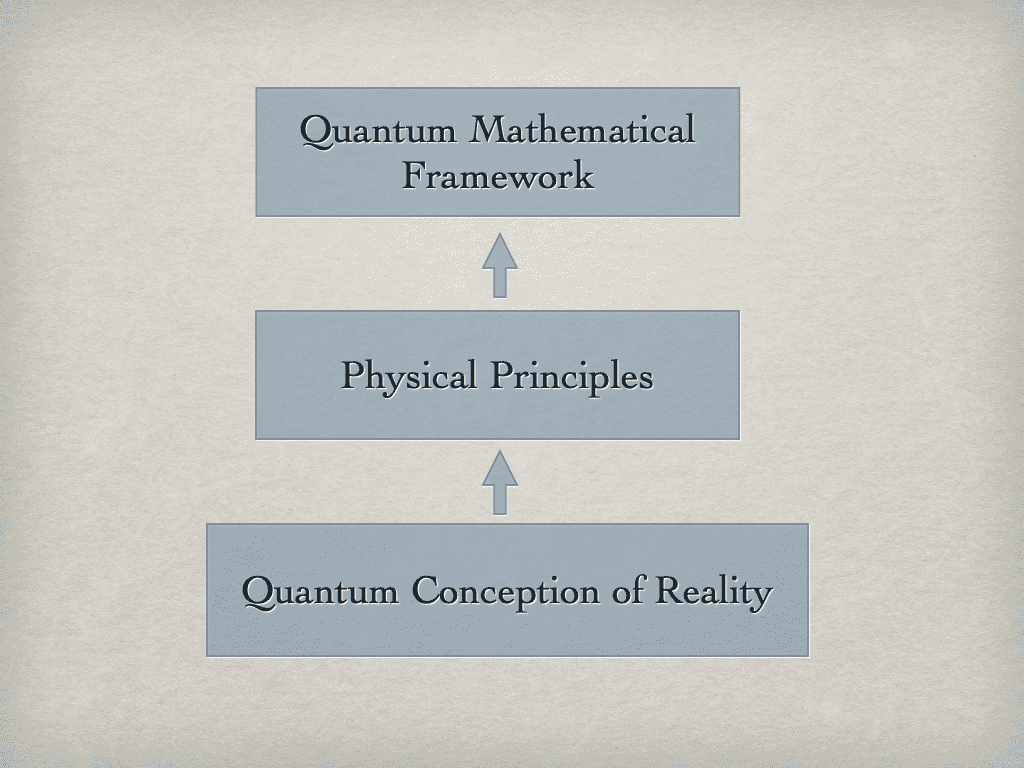

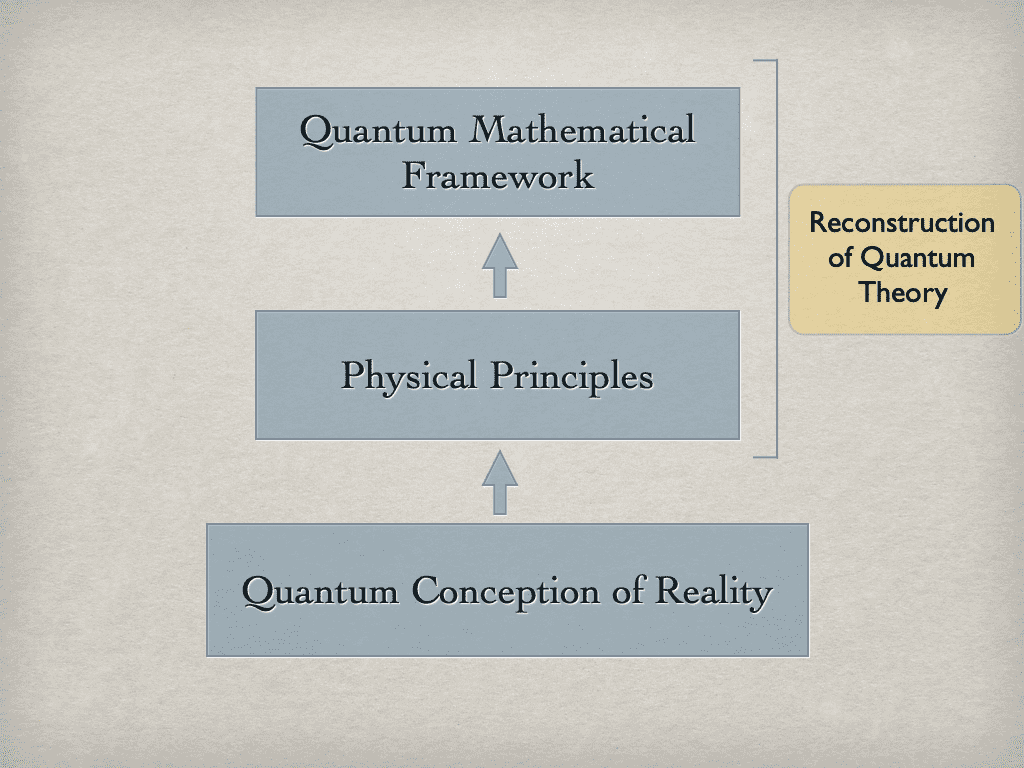

[Philipp Berghofer]: I guess I was just thinking about your talk, and I personally should think to emphasise that I think that this project of reconstructing physics, reconstructing quantum physics… quantum mechanics… is really super interesting, I think, from a phenomenological point of view, particularly if we consider that from a phenomenological viewpoint, what you’re going to do with this kind of fieldwork that was mentioned by Harald [Wiltsche].

So, secondly, do you think that the work you are doing also has some direct consequences concerning our picture of quantum field theory? So, how would you consider the relationship between quantum mechanics and quantum field theory?

‘Cause if you think about it, particles from the perspective of classical mechanics, versus the perspective of quantum field theory, then you’ve mentioned this briefly, particularly if you subscribe to the field interpretation, according to which the fundamental objects are not particles but fields, and particles are excitations of fields and so on, then we would say, “Okay, we should subscribe to the…” (movement drowns out speaker) …according to which it is not some solid stuff, but is sitting over time, more as an analogy to a wave in the sea (inaudible) …one particular wave over that time.

I’m a little confused. So how do you estimate the relationship between quantum mechanics and quantum field theory according to your view?

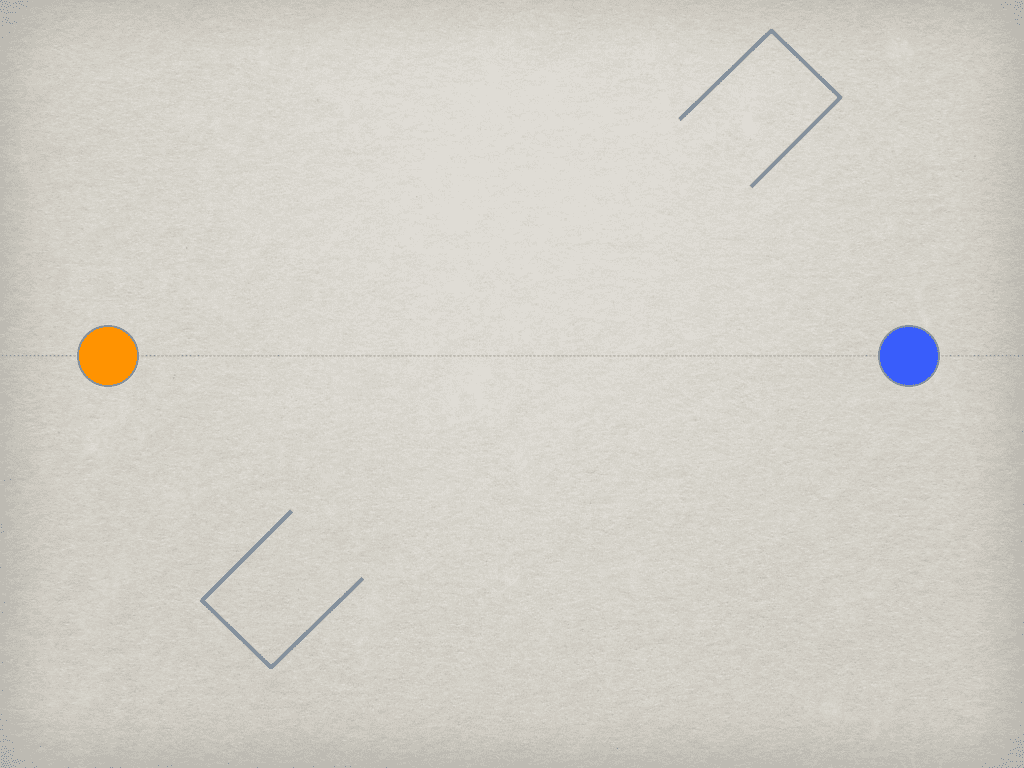

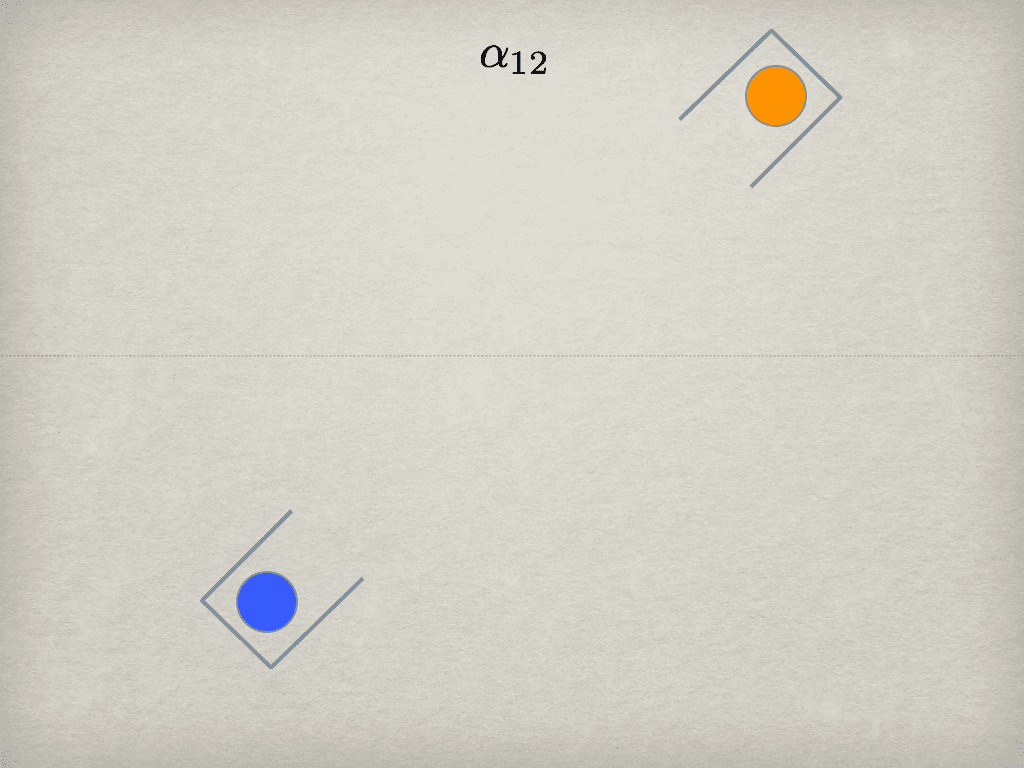

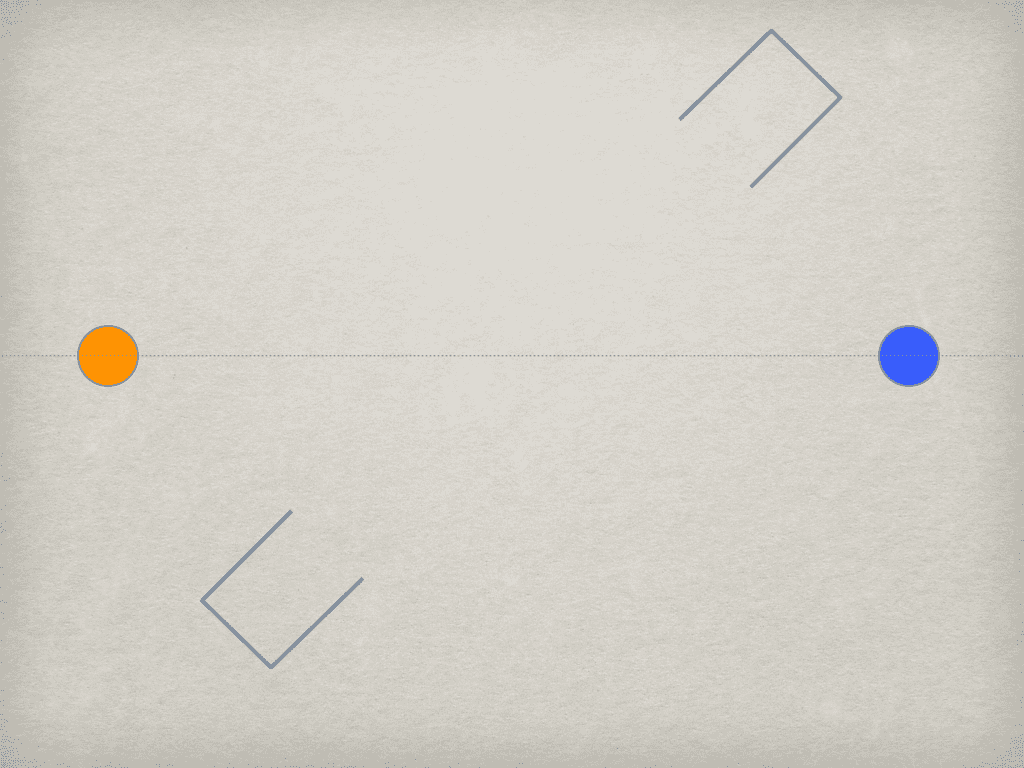

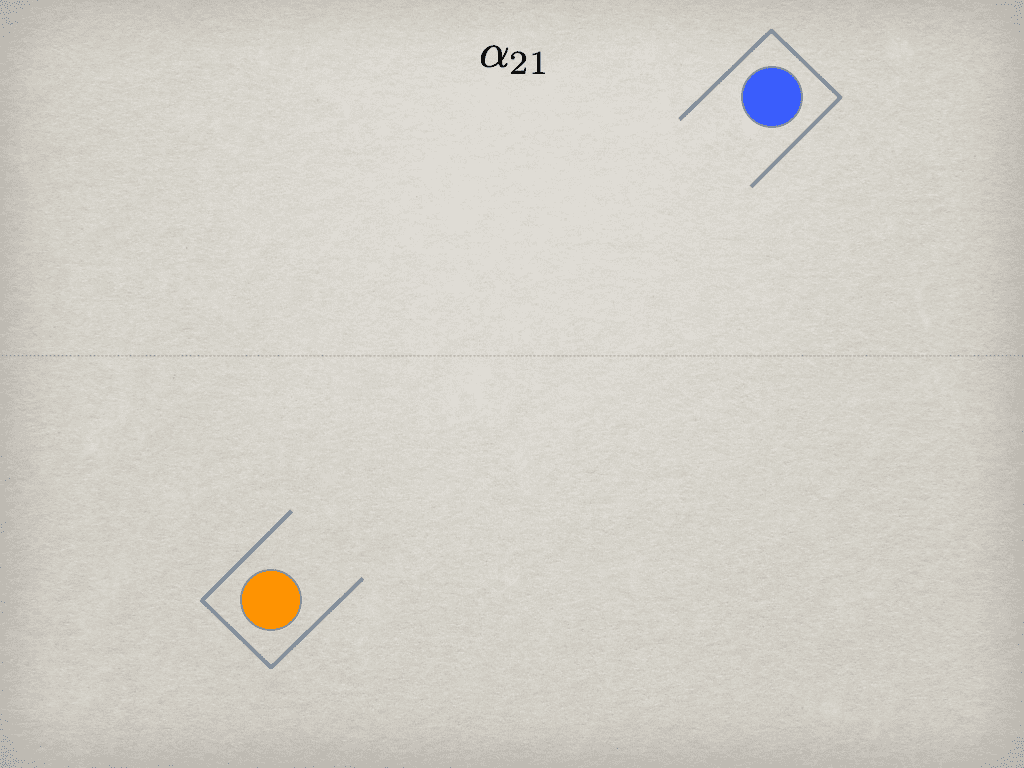

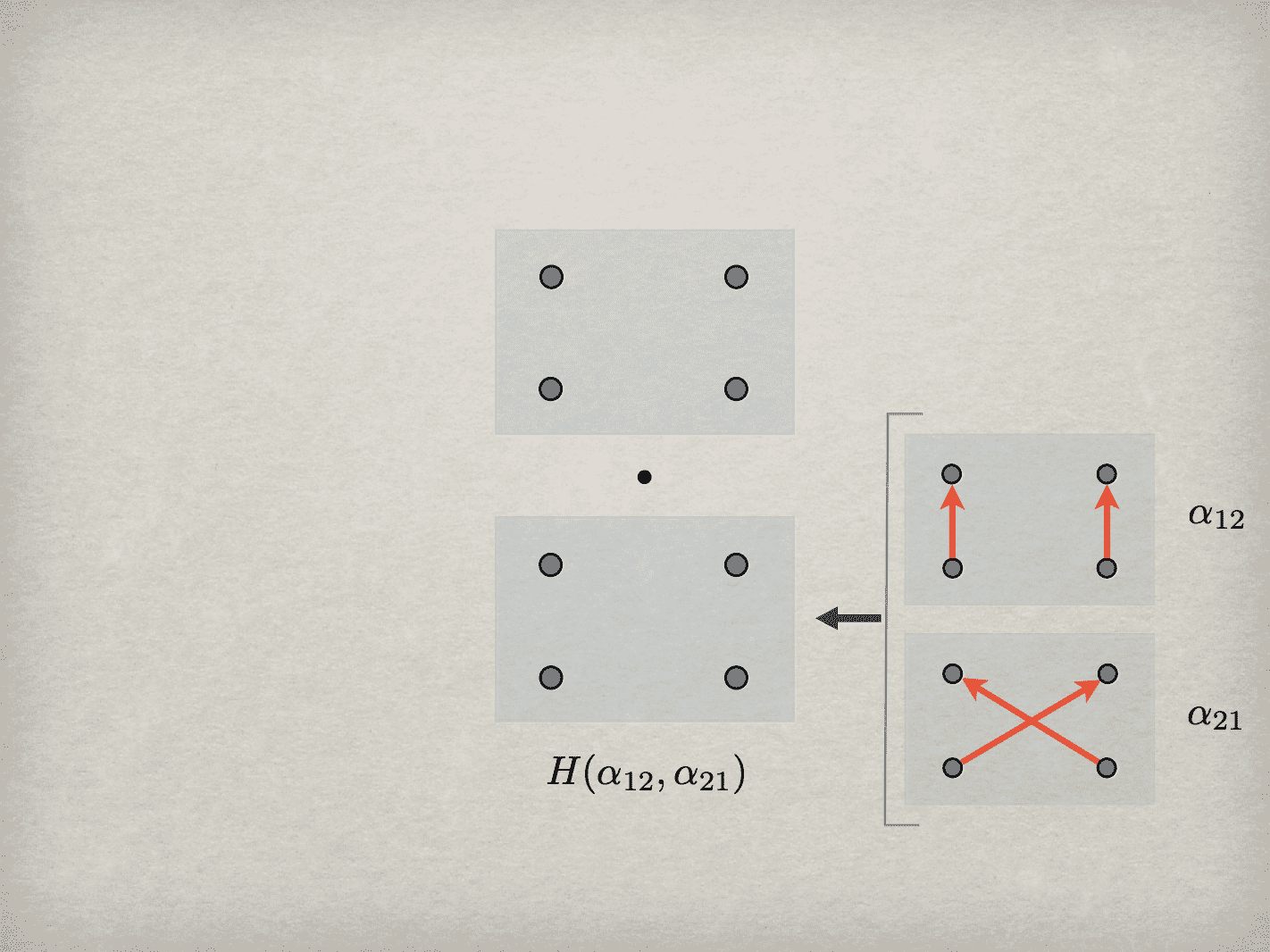

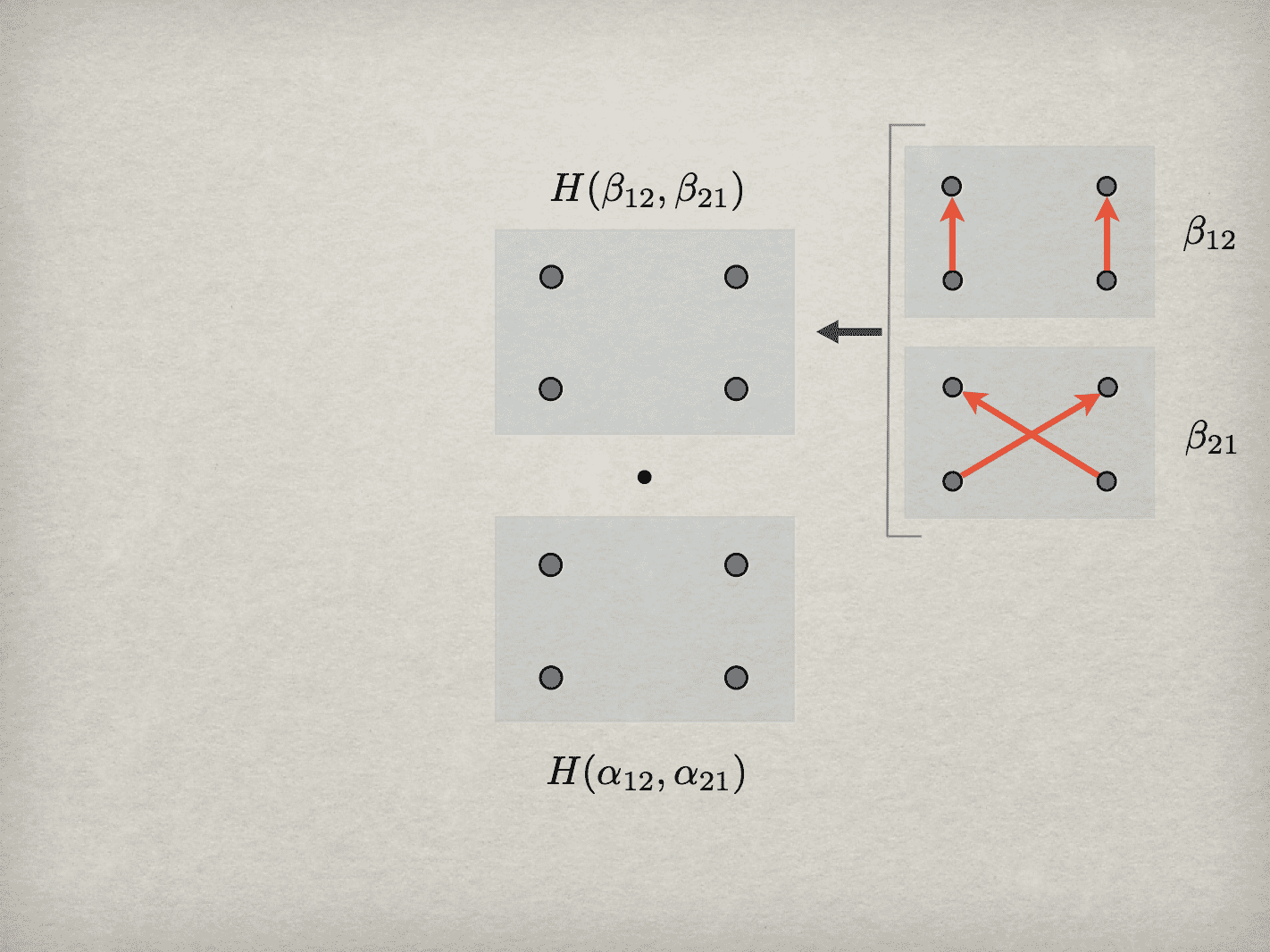

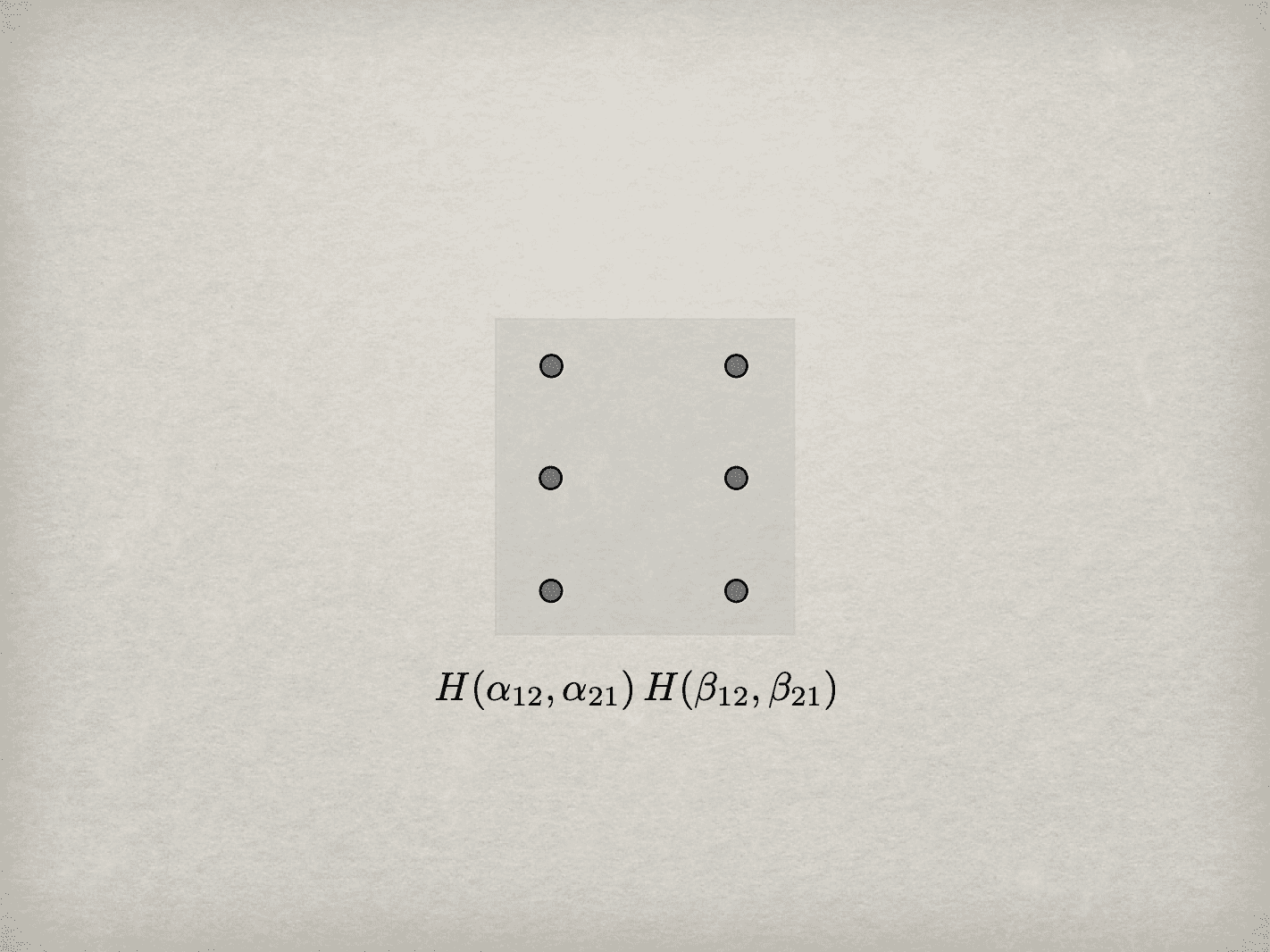

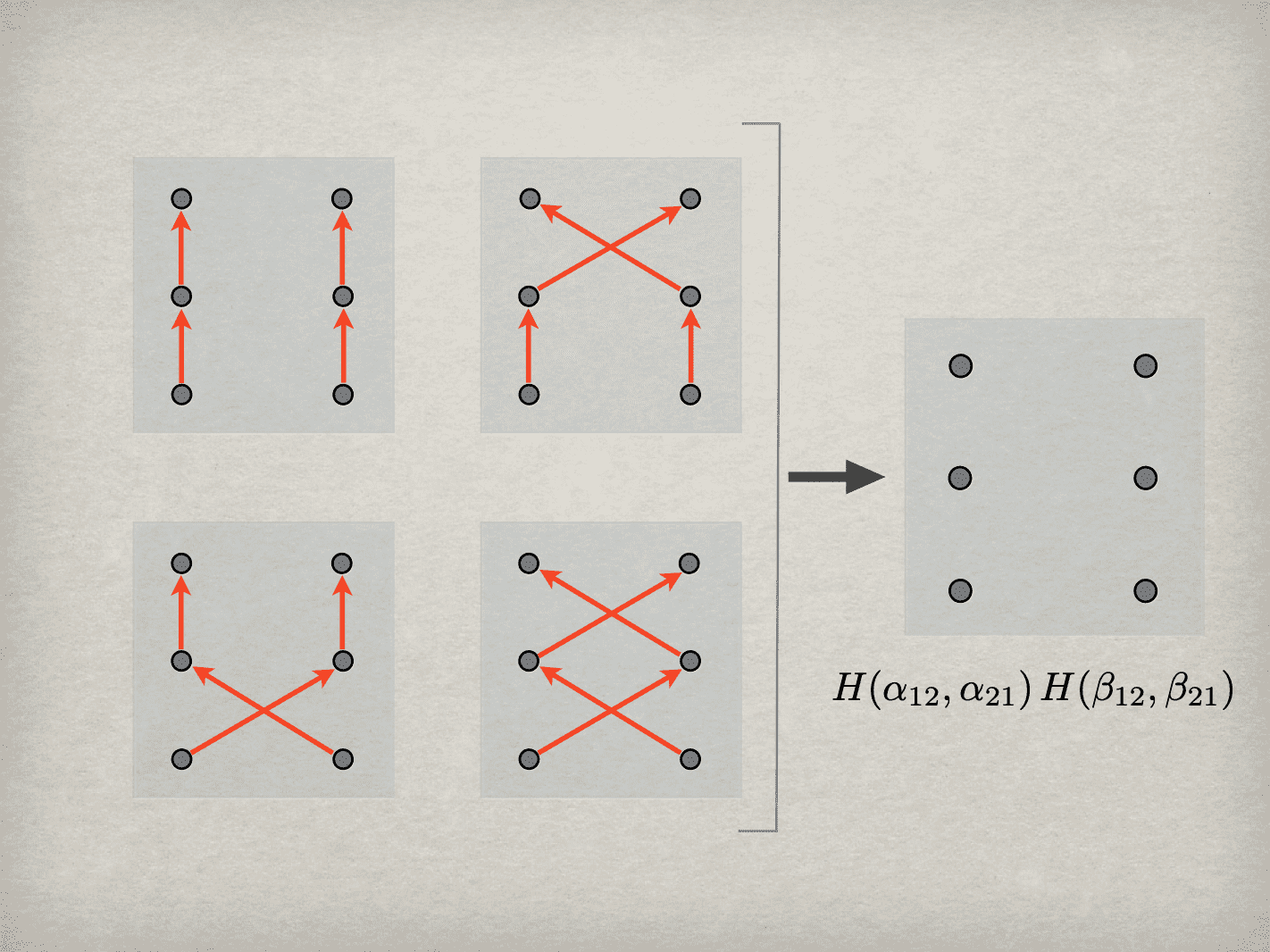

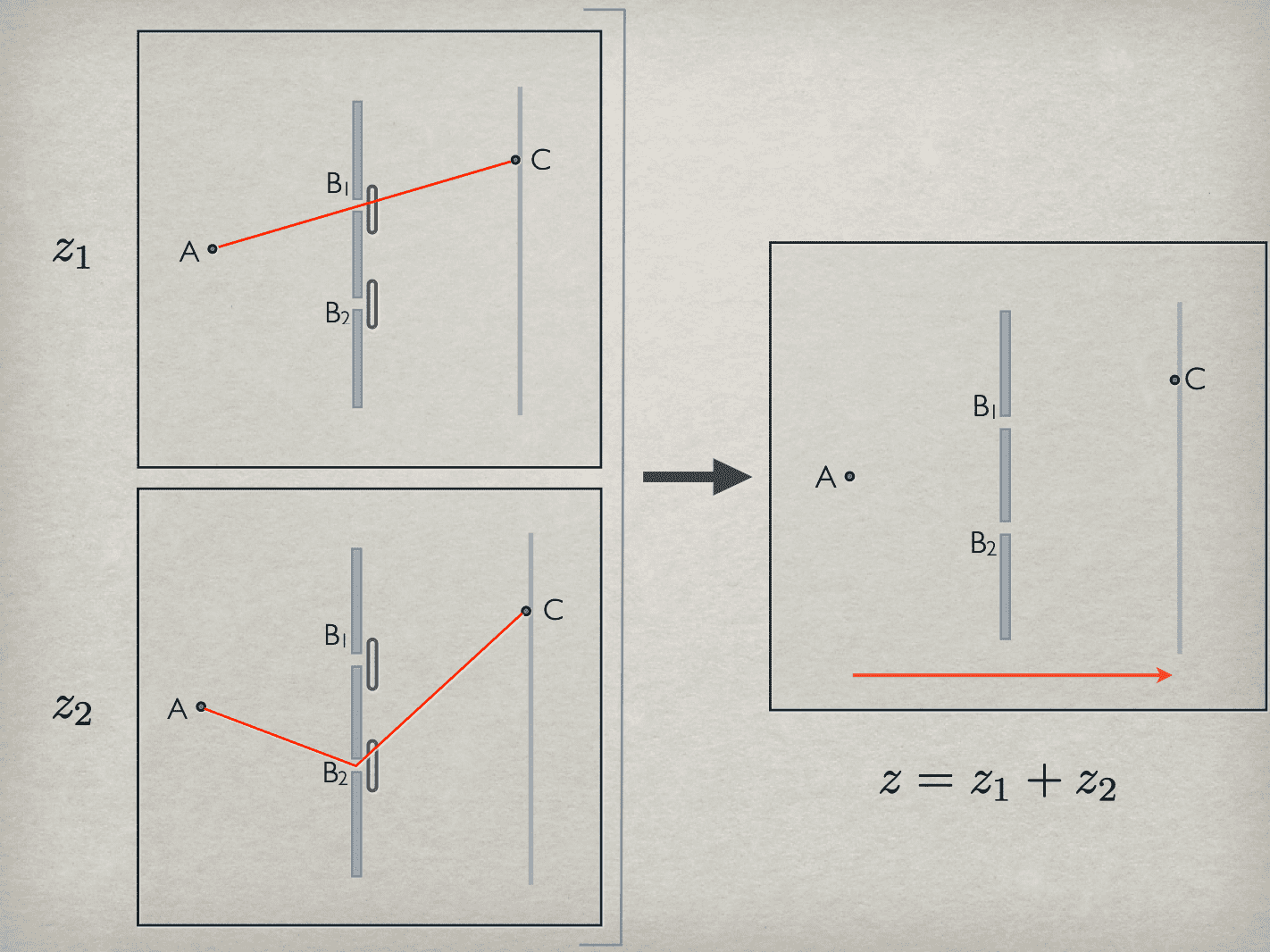

[Philip]: Yeah, so it’s a great question, and it’ll take a little bit to get through this. So as I was saying to Michel, if you restrict quantum field theory to a given, constant number of particles, then you can make a mapping between the quantum theoretic description… quantum mechanical description, of a set of identical particles, and the quantum field theoretic description.

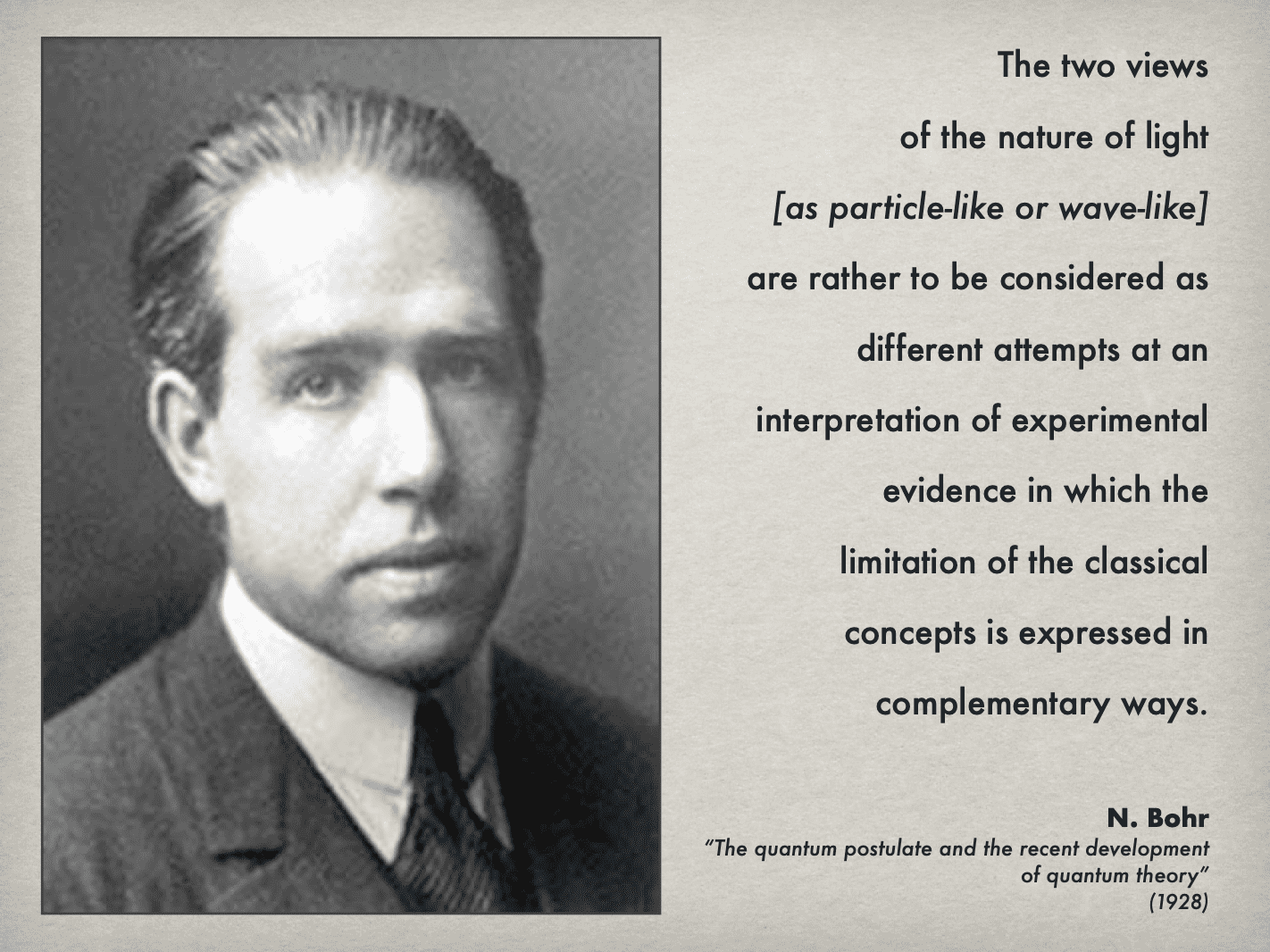

So at that level, the fact that one would be inclined to read these mathematical formalisms differently (the first being interpreted on the basis of the idea of persistence but not re-identifiability, the second as nonpersistence), that is really a kind of trick. We’re essentially being fooled, because we’re not being careful enough, in my view.

So what I’m claiming here is that, in both cases you need the idea of persistence and nonpersistence. So that’s the first part of it.

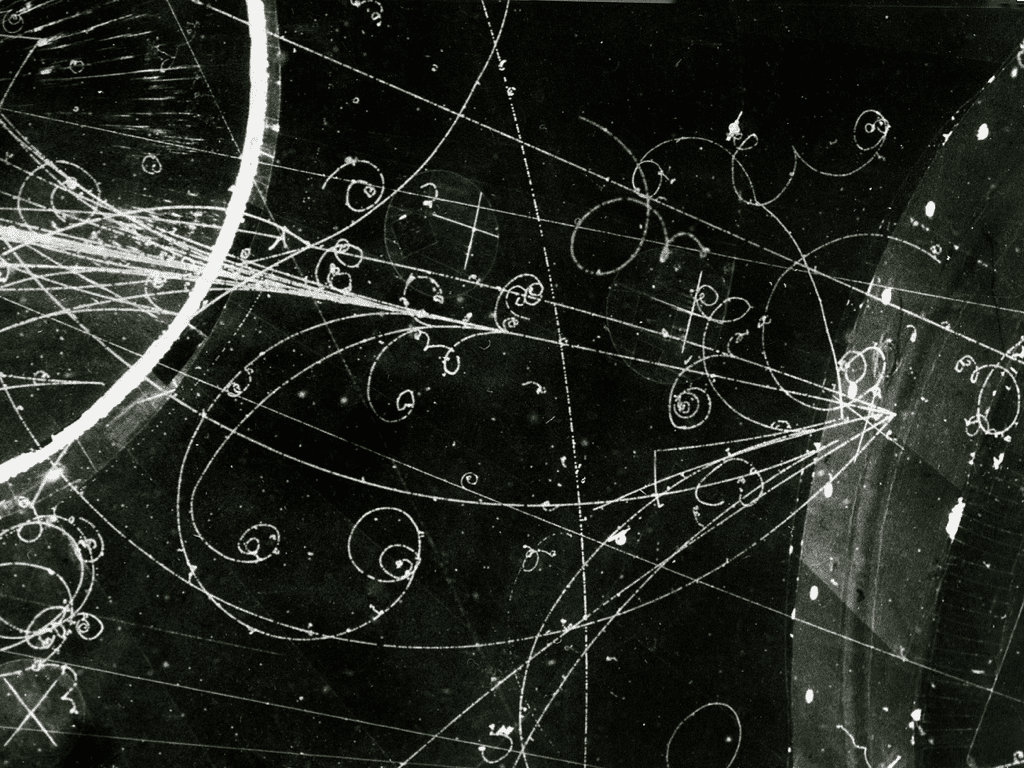

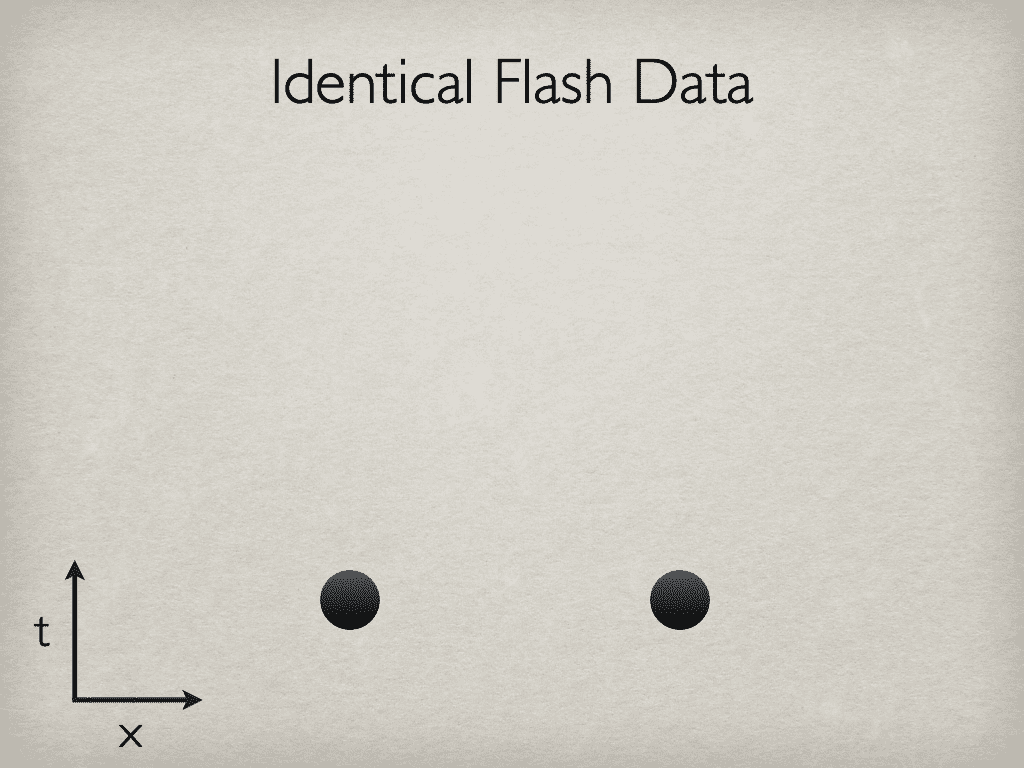

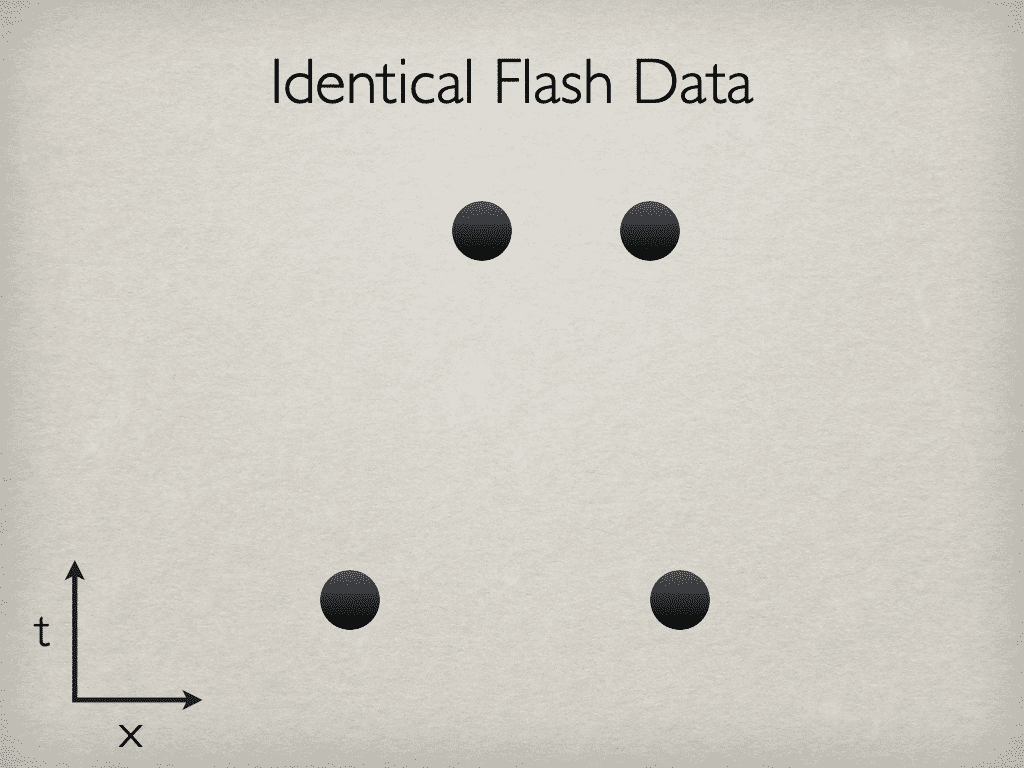

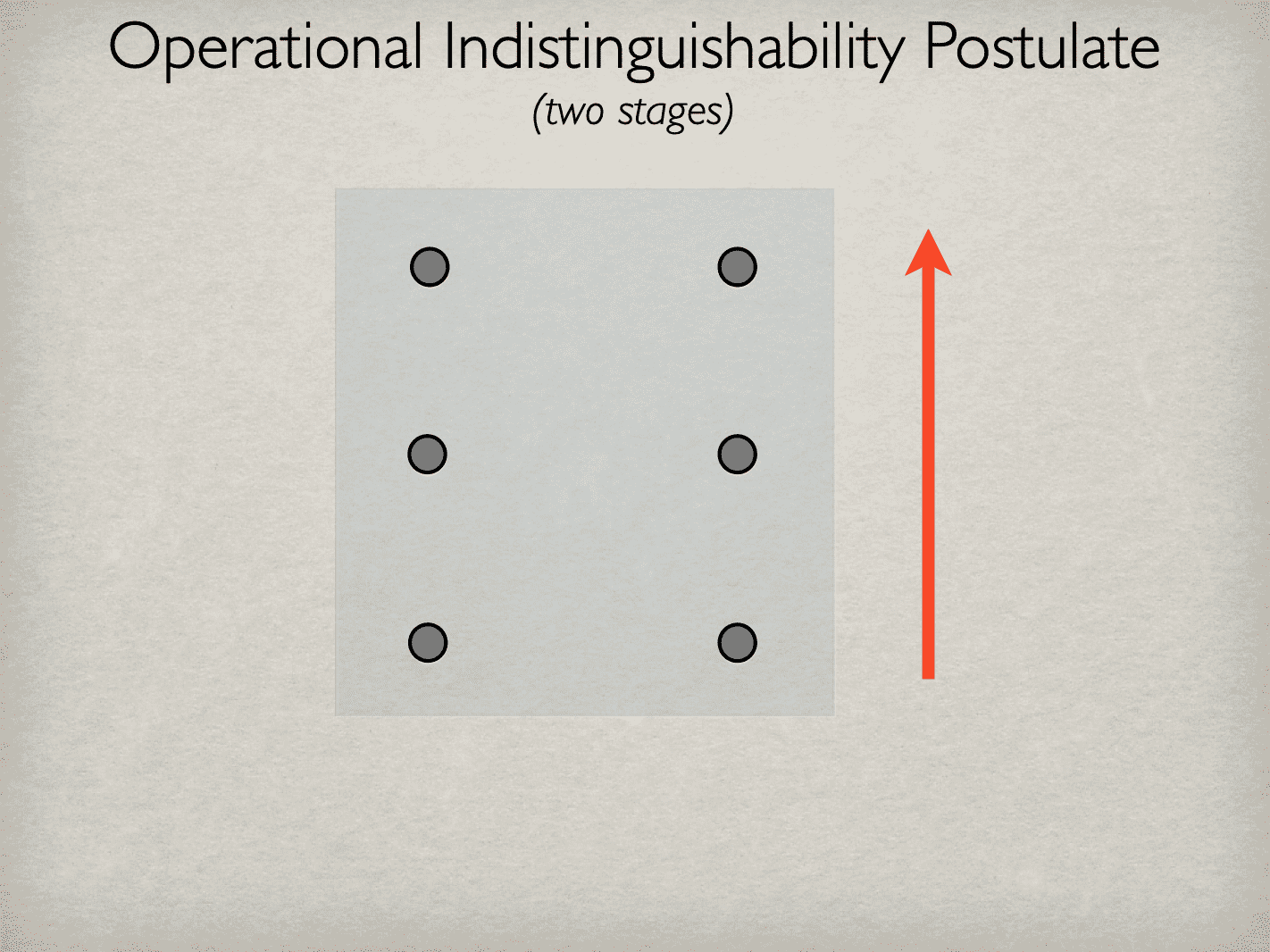

But then I suppose I want to also talk about, the idea of, that what quantum field theory brings in, when you allow the number of quanta to vary… so you could have three flashes and then two, then six.

Right? It can vary. That then, of course, brings in a further layer of complexity. Right, there’s another layer that we’ve introduced into the formalism, the possibility that a number of flashes can vary. That obviously then drives home the idea that these particles aren’t continuously persistent, so that the number, from the particle point of view, is varying.

So that’s an embellishment on top, and that’s something that I do want to elaborate. I want to understand better what conceptual innovation is involved in that step. So it’s very much an open question for me.

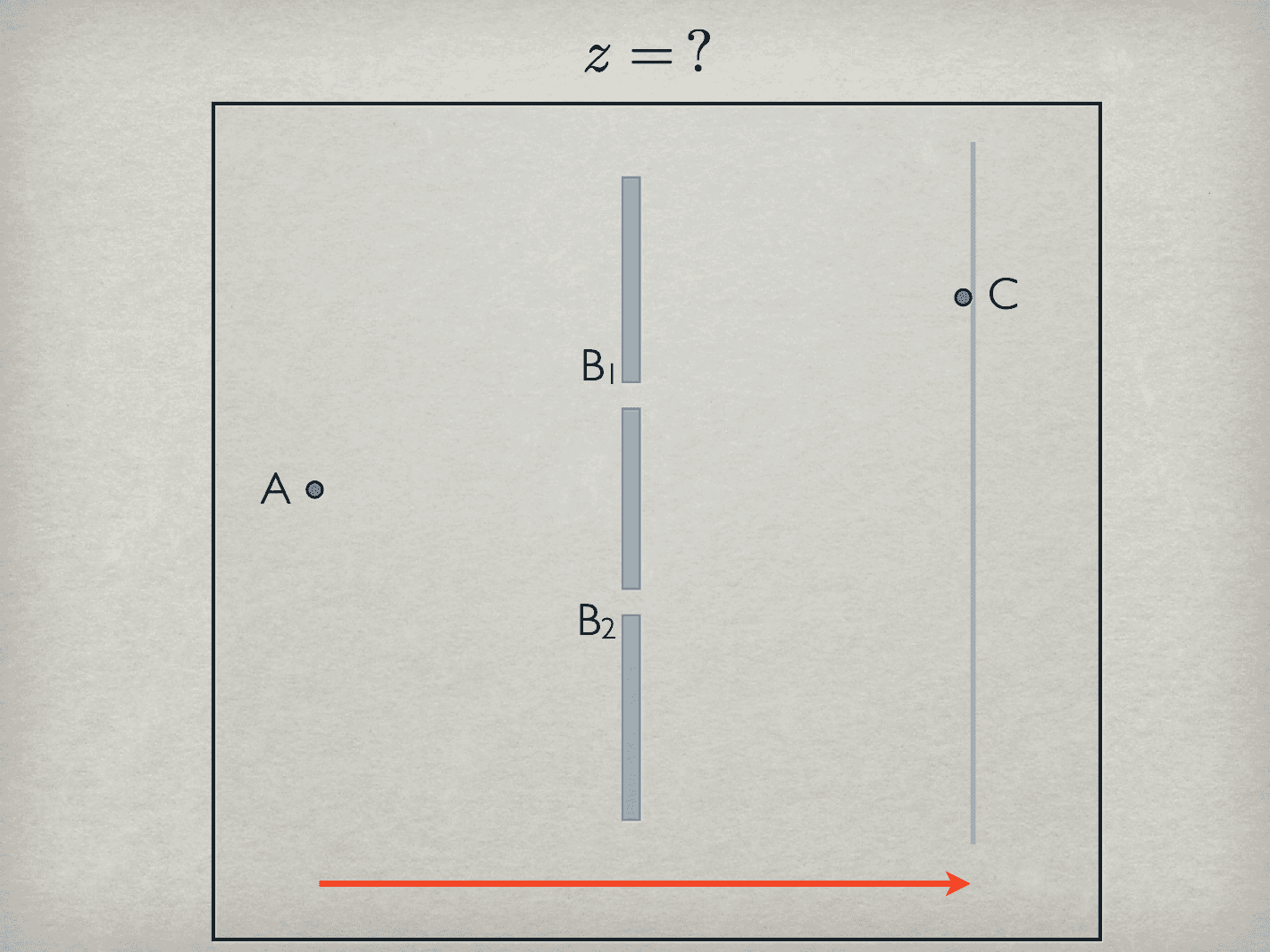

[Vincent]: So when we break a particle down into these two complementary ways of representing them, the position and something that more or less looks like momentum, with… direction. What do you make of the fact that these things are related by boosts? So, if we are particularly talking about a single particle, we can always boost into the frame with one of ‘em where there’s no directionality.

And is there some kind of pointer to a fully relational description? So, in other words, if we have just the one particle, and one of those things doesn’t really exist. The momentum is kind of, there’s no state of affairs with respect to the momentum. And if we have two, the logical way of thinking about it is that, there’s only some smaller state of affairs, with respect to the relative momenta of the two particles, but not the thing. So is there a way, some way for us to start connecting quantum mechanics up to the special relativity in this particular context?

[Philip]: The simple answer is I don’t know. Those are two very interesting thoughts, and the second one, about the relationalism, is particularly…

The relational ideas have…it feels like have been more directly explored in, for example, shape dynamics, which you mentioned, and it’s been explored in different ways by a number of different people.

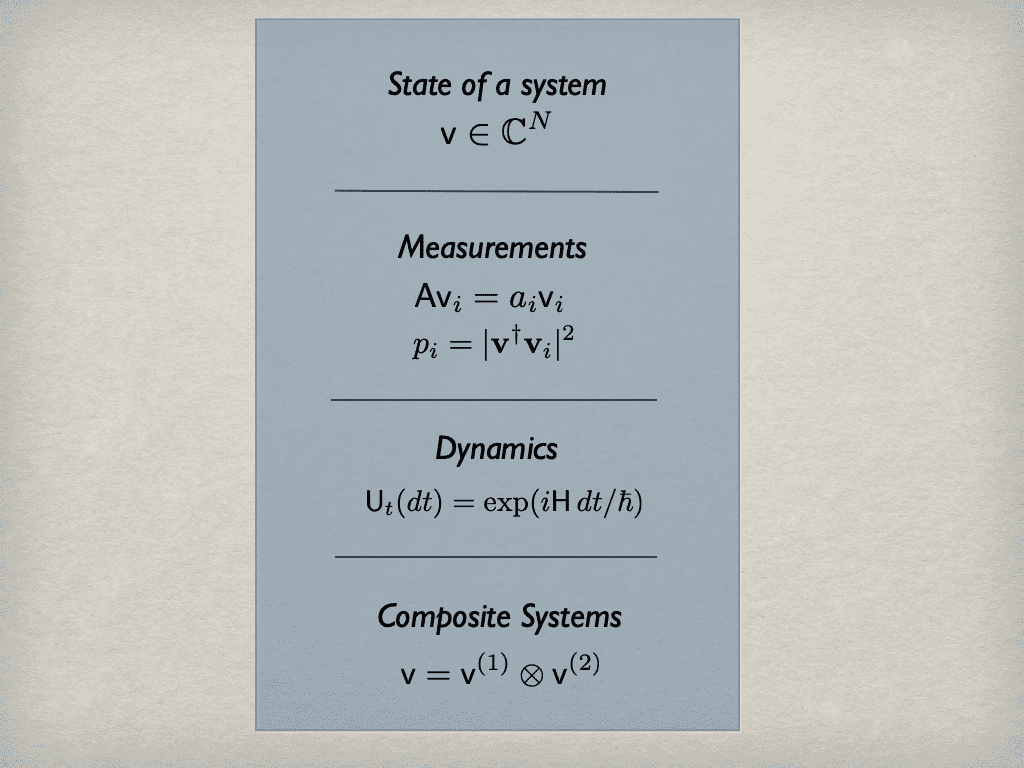

But yeah, in this context… remember the basic focal point, is here the abstract quantum formalism, which is kind of an abstract shell in which we build quantum theories. And at that level—the abstract formalism—there’s no concept of particle in space, with momentum or position. That hasn’t been introduced yet.

So, I think the question that you’re asking, is kind of at the next level, which is to say when you try to actually build explicit quantum theories of stuff in space, then is there any benefit to formulating a relational quantum theory of stuff in space, rather than what we normally do. I don’t really know the answer to that.